Discover DeepSeek

This Free AI Model is Changing the Game

In a world where AI tools are becoming indispensable, DeepSeek has emerged as a global sensation. From developers to creative professionals, users worldwide are flocking to this open-source marvel for its unparalleled efficiency, versatility, and privacy-first design. Launched just a few years ago, DeepSeek has rapidly grown into a powerhouse, thanks to its innovative architecture and commitment to accessibility. Its rise is not just a story of technological brilliance but also a testament to the growing demand for AI that prioritizes user control and transparency.

Why is DeepSeek Making Waves?

While models like GPT-4 and Claude dominate the AI conversation, DeepSeek stands out with its unique blend of features:

| Feature | DeepSeek | GPT-4 | Claude | Gemini |
| --- | --- | --- | --- | --- |
| Open-Source | Yes | No | No | No |
| Free to Use | Yes | Paid | Limited Free Tier | Paid |
| Local Deployment | Yes | No | No | No |
| Multimodal Support | Yes | Yes | No | Yes |
| Lightweight Design | Yes | No | No | No |

Features: DeepSeek vs. Mainstream LLMs

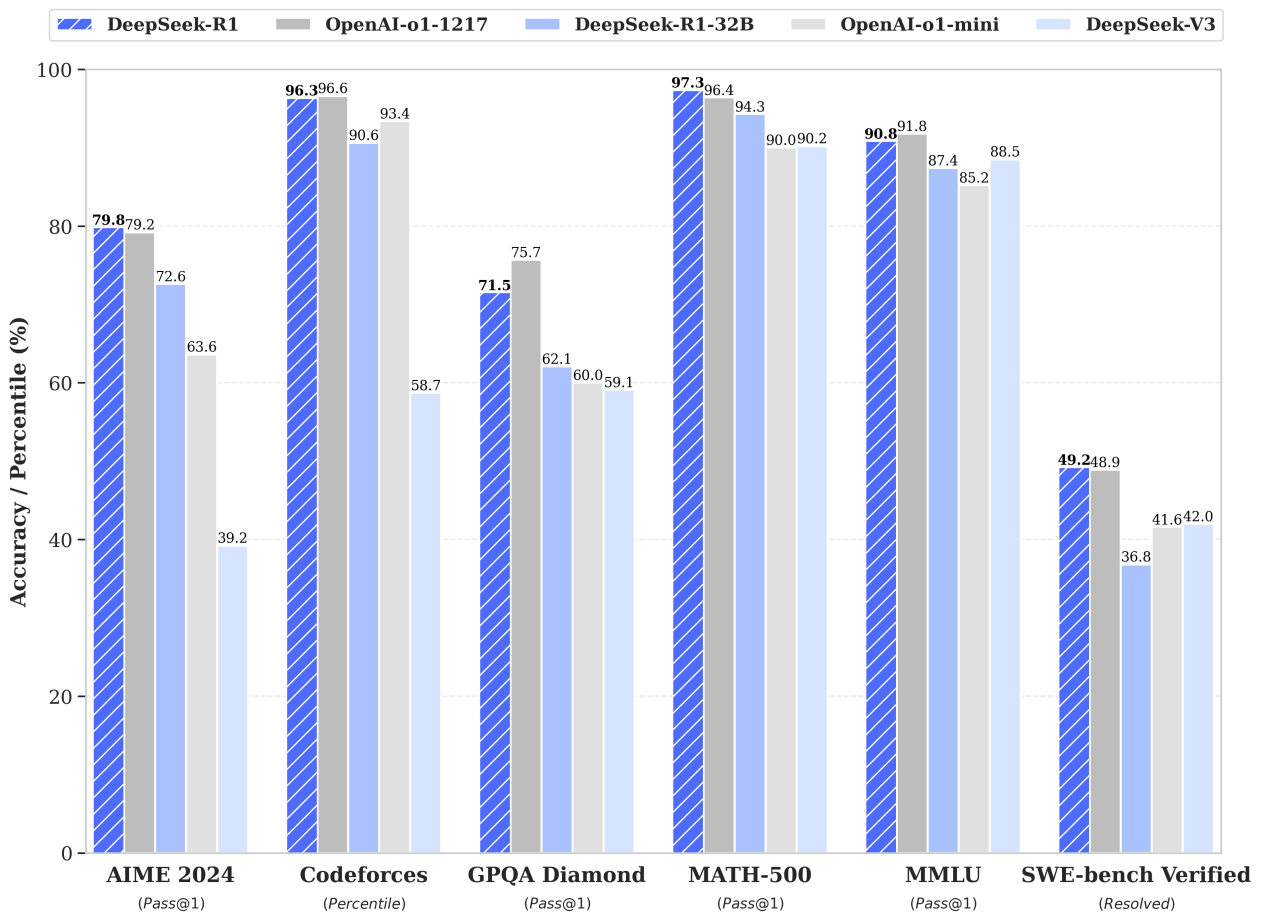

As the comparison shows, being open source and free to use is a major reason for DeepSeek's popularity. Yet despite being free, its performance is comparable to that of OpenAI's high-end reasoning model. The figure below compares DeepSeek-R1 with OpenAI-o1.

Performance: DeepSeek vs. OpenAI-o1

Source: @deepseek_ai on X.com

Another important point is that DeepSeek's training cost is very low compared with that of large models from companies such as OpenAI. Training cost matters for the future of AI: lower costs mean faster development.

Getting Started with DeepSeek

Register and Access

Getting started with DeepSeek is incredibly easy. Simply sign up on the DeepSeek website to access its online services, including Internet-connected features and Deep Thinking mode. For on-the-go access, download the DeepSeek mobile app, available for both iOS and Android.

Mastering Prompts

A prompt is essentially the input you provide to an AI model to guide its output. Think of it as a question or instruction that tells the AI what you need. The quality of your prompt directly impacts the quality of the AI's response.

Why are prompts important? A well-crafted prompt can unlock the full potential of DeepSeek, enabling it to generate highly accurate, relevant, and creative outputs. Whether you're summarizing a document, writing code, or planning a trip, the right prompt ensures the AI understands your intent and delivers the best possible result.

To help users master prompt engineering, DeepSeek offers an official Prompt Library, where you can find examples and templates for various tasks.
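One way to make prompts more consistent is to assemble them from a few named parts. The sketch below is only an illustration: `build_prompt` and its `task`, `context`, and `constraints` parameters are hypothetical names chosen for this example, not part of DeepSeek or its Prompt Library.

```python
from typing import Optional, Sequence


def build_prompt(
    task: str,
    context: str = "",
    constraints: Optional[Sequence[str]] = None,
) -> str:
    """Assemble a prompt from a task, optional constraints, and optional context.

    Hypothetical helper for illustration; the section layout is an assumption,
    not an official DeepSeek prompt format.
    """
    parts = [task.strip()]
    if constraints:
        # One constraint per line keeps the instructions easy to scan.
        bullet_lines = "\n".join(f"- {item}" for item in constraints)
        parts.append("Constraints:\n" + bullet_lines)
    if context.strip():
        parts.append("Context:\n" + context.strip())
    return "\n\n".join(parts)


prompt = build_prompt(
    task="Summarize the key points of this article.",
    context="[Insert Article Text]",
    constraints=["Use at most five bullet points.", "Keep a neutral tone."],
)
print(prompt)
```

Stating the task first and the supporting material last is a design choice for readability here; other orderings may work just as well.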

Core Features and Use Cases

DeepSeek excels in a wide range of applications, making it a versatile tool for both professionals and casual users. Here are some of its standout capabilities:

1. Text Generation and Understanding:

DeepSeek can analyze, summarize, and generate text with remarkable accuracy. Whether you're drafting emails, creating reports, or extracting insights from lengthy documents, DeepSeek delivers results in seconds.

● Prompt: "Summarize the key points of this article: [Insert Article Text]"

● Output: A concise, accurate summary tailored to your needs.

2. Code Generation and Optimization:

Developers can leverage DeepSeek to write, debug, and optimize code faster than ever before. It supports multiple programming languages and can even explain complex code snippets in plain English.

● Prompt: "Write a Python function to calculate the Fibonacci sequence."

● Output: A clean, efficient code snippet ready for use.

3. Personalized Travel Planning:

Planning a trip? DeepSeek can help you create a detailed itinerary based on your preferences, budget, and schedule.

● Prompt: "Plan a 5-day trip to Japan, including Tokyo and Kyoto. I enjoy history, food, and nature."

● Output: A day-by-day itinerary with recommendations for historical sites, local cuisine, and scenic spots.
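To make the code-generation example above more concrete, here is a hand-written sketch of the kind of answer the Fibonacci prompt is asking for. It is not actual DeepSeek output, and a real response may be structured differently.

```python
def fibonacci(n: int) -> list[int]:
    """Return the first n Fibonacci numbers, starting from 0."""
    if n < 0:
        raise ValueError("n must be non-negative")
    sequence = []
    a, b = 0, 1
    for _ in range(n):
        sequence.append(a)
        a, b = b, a + b  # advance to the next pair of values
    return sequence


print(fibonacci(10))  # [0, 1, 1, 2, 3, 5, 8, 13, 21, 34]
```

Whatever the model returns, it is worth running a quick check like the `print` call above before using generated code.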

Advanced Use of DeepSeek

● Local Deployment

Deploying DeepSeek locally offers three major advantages:

1. Privacy and Security:

Your data never leaves your device.

2. Independence from Cloud Servers:

No reliance on external infrastructure. Say goodbye to the “DeepSeek Server Is Busy” error.

3. Offline Functionality:

Use DeepSeek without an internet connection.
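As a rough sketch of what local use can look like in practice, the snippet below sends a prompt to a model served on your own machine. It assumes the model is served by Ollama on its default port and that a DeepSeek-R1 distill has been pulled under the tag `deepseek-r1:14b`; the article does not name a specific runtime, so treat both as assumptions and adjust them to your setup.

```python
import json
import urllib.request

# Assumption: a local Ollama server listening on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_local_request(prompt: str, model: str = "deepseek-r1:14b") -> urllib.request.Request:
    """Build (but do not send) a generate request for a locally served model."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask_local_model(prompt: str, model: str = "deepseek-r1:14b", timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    request = build_local_request(prompt, model)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["response"]


# Example (requires the local server to be running):
# print(ask_local_model("Summarize the benefits of running an LLM locally."))
```

Because the request only ever goes to `localhost`, the prompt and the response stay on your device, which is the privacy benefit described above.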

● Hardware Requirements

DeepSeek's models are designed to be lightweight and efficient, but hardware requirements vary depending on the model size:

| DeepSeek Model Version | Parameters | Characteristics | Use Cases | Hardware Configuration |
| --- | --- | --- | --- | --- |
| DeepSeek-R1-1.5B | 1.5B | Lightweight model with fewer parameters and smaller size | Suitable for lightweight tasks such as short text generation and basic Q&A | 4-core CPU, 8GB RAM, no discrete GPU required |
| DeepSeek-R1-7B | 7B | Balanced model with good performance and moderate hardware requirements | Ideal for medium-complexity tasks like copywriting, table processing, and statistical analysis | 8-core CPU, 16GB RAM, Ryzen 7 or higher, RTX 3060 (12GB) or higher |
| DeepSeek-R1-14B | 14B | High-performance model, excels at complex tasks like mathematical reasoning and code generation | Capable of handling complex tasks such as long text generation and data analysis | i9-13900K or higher, 32GB RAM, RTX 4090 (24GB) or A5000 |
| DeepSeek-R1-32B | 32B | Professional-grade model with powerful performance, suitable for high-precision tasks | Ideal for large-scale tasks like language modeling, large-scale training, and financial forecasting | Xeon 8-core, 128GB RAM or higher, 2-4x A100 (80GB) or higher |
| DeepSeek-R1-70B | 70B | Top-tier model with the strongest performance, suitable for large-scale computation and highly complex tasks | Designed for high-precision professional tasks, such as multimodal task preprocessing. Requires high-end CPUs and GPUs, suitable for enterprises or research institutions with ample budgets | Xeon 8-core, 128GB RAM or higher, 8x A100/H100 (80GB) or higher |
| DeepSeek-R1-671B | 671B | Ultra-large-scale model with exceptional performance and fast inference, suitable for extremely high-precision demands | Ideal for national-level or ultra-large-scale AI research, such as climate modeling, genomic analysis, and general artificial intelligence exploration | 64-core CPU, 512GB RAM or higher, 8x A100/H100 |

Of these, the 671B version is the full DeepSeek-R1 model; the smaller versions are distilled models derived from it.

For casual users, the 14B model provides an excellent experience without requiring top-tier hardware. If you're using an AMD Ryzen™ AI series chip, check out this community guide for optimized deployment without needing a discrete graphics card.

| Processor | DeepSeek R1 Distill\* (Max Supported) |
| --- | --- |
| AMD Ryzen™ AI Max+ 395 (32GB¹, 64GB² and 128GB) | DeepSeek-R1-Distill-Llama-70B (64GB and 128GB only) |
| AMD Ryzen™ AI HX 370 and 365 (24GB and 32GB) | DeepSeek-R1-Distill-Qwen-14B |
| AMD Ryzen™ 8040 and Ryzen™ 7040 (32GB) | DeepSeek-R1-Distill-Qwen-14B |

\* AMD recommends running all distills in Q4_K_M quantization.

¹ Requires Variable Graphics Memory set to Custom: 24GB.

² Requires Variable Graphics Memory set to High.
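To get a feel for why model size and quantization drive these hardware requirements, a back-of-the-envelope estimate helps: the memory for the weights alone is roughly the parameter count times the bits per weight, divided by eight. The sketch below assumes about 4.5 bits per weight as a stand-in for 4-bit quantization; that figure is an assumption, the real average depends on the quantization scheme, and the estimate ignores the context cache and runtime overhead.

```python
def estimate_weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory for the model weights only, in GB (10^9 bytes).

    Ignores the KV cache, activations, and runtime overhead, so real usage
    will be higher than this figure.
    """
    if params_billion <= 0 or bits_per_weight <= 0:
        raise ValueError("params_billion and bits_per_weight must be positive")
    # billions of parameters * bits per parameter / 8 bits per byte = GB
    return params_billion * bits_per_weight / 8


ASSUMED_4BIT_BITS = 4.5  # assumption: rough average for 4-bit quantization

for size in (1.5, 7, 14, 32, 70, 671):
    fp16 = estimate_weight_memory_gb(size, 16)
    quantized = estimate_weight_memory_gb(size, ASSUMED_4BIT_BITS)
    print(f"{size:>6}B  FP16 ~{fp16:7.1f} GB   4-bit ~{quantized:6.1f} GB")
```

Under these assumptions, a 14B model needs roughly 28 GB for its weights at 16-bit precision but under 8 GB when quantized to about 4 bits, which helps explain why quantized distills can fit on consumer hardware.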

The Future of AI is Open and Local

DeepSeek represents more than just a technological breakthrough: it's a movement toward democratizing AI. By making powerful tools open-source and accessible, DeepSeek is empowering individuals and organizations to innovate without barriers.

As AI continues to evolve, we can expect it to become even more lightweight, personalized, and integrated into our daily lives. Here's how you can start using AI like DeepSeek to enhance your work and life:

● Work: Automate repetitive tasks, generate reports, or debug code faster.

● Life: Plan trips, create personalized content, or even learn new skills with AI-powered tutoring.

Try DeepSeek today and join the revolution. If you happen to have a PC powered by an AMD Ryzen™ AI chip, such as the AI 370 or AI X1 Pro from Minisforum, then deploying DeepSeek locally would be an interesting and novel option.

Originally published by: Minisforum JP